Love is Love, Science is Fake

We are taught to look for universal, scientific answers, even to personal questions. But "dating science" can't actually exist in academia. In its place is a total fraud.

In the last essay, we discussed the dating books full of recipe-like instructions for your romantic life. These recipes don’t really work, even for the men who write them. You need to know how to demonstrate the specific traits most attractive to your dream partner, but all books can offer is advice on generic dateability: have good photos, be nice to the waiter, smile and listen actively… That’s also why these books are all the same (aside from the ones that offer generally terrible advice).

But books are a business, and publishers need to sell generic dating books to people with unique and weird dating lives. They need an ingredient that gives the book authority and general applicability. And so they’ve come up with the high-fructose corn syrup of romantic literature: Science™.

While you were muddling about your provincial dating life, our best researchers have distilled the enduring principles of love in their lab. While you’re texting friends about crushes, clinginess, and booty calls, they charted the formulas of limerence, attachment, and proxemics. They gave a TED talk so erotically edifying the entire front row became pregnant on the spot.

At last, they have refined their grand theory into everyday advice you can use: have good photos, be nice to the waiter, smile and listen actively…

For a scientific finding to improve your dating life upon learning of it, it must satisfy three criteria:

1. True
2. News to you
3. Applicable to your dating life

We assume that if scientists found something, it must be true. If it was published and popularized, it must be new and interesting. Some of it won’t be applicable or actionable for you specifically, but it can’t hurt to learn new true things about dating.

I wish this were the case. It categorically isn’t. Academic research on dating is only able, by its basic structure, to measure variables far removed from real people pursuing real relationships. As a consequence, its findings are either entirely self-evident or entirely fake.

The Circumcision Study Was Retracted for PP Hacking

Dating research generally falls under the umbrella of social psychology, defined as the “study of how thoughts, feelings, and behaviors are influenced by the actual, imagined, or implied presence of others.” This is a solid definition. Not coincidentally, it also covers everything I plan to write in Second Person.

But while I can publish anything I deem true and useful, only a small subset of social psychology findings can be published in a research journal. Only those that adhere to the accepted methodology of social science. Namely: a positive and novel result identified via p-value testing in a closed-end experiment. The experiment yielding this result must generally fall within the time and money budget of a single psych department. There’s almost no dating research that is truly interdisciplinary or that has the resources to track large numbers of people over the many years relationships take to develop. This methodology, required to publish a social psychology paper, is woefully inadequate for discovering true and surprising facts about social psychology.

If a finding is true, the least we can expect is that it will replicate when the same experiment is repeated. In a recent test of findings from top psychology journals, only 36% did. For the Journal of Personality and Social Psychology, it was just 23%. More than three quarters of published results in social psychology aren’t real.

There’s a lot of great writing about the malpractice of social science that led to this dismal outcome. Back in 1962, a statistician reviewed the p-value methodology used in a psychology journal and concluded that it is as likely to produce false results as real ones. This methodology is credulous enough to prove or disprove anything the author wants to, like the existence of magical extra-sensory perception. On my old blog, I explained how to easily tell which published results are fake at a glance, the mathematics of how this methodology goes haywire, and a fun example of using a study’s own data and methods to prove the opposite of the result it claimed.

Without getting too much into the mathematical weeds, here are the basics of how p-value testing is totally fucked. The questions we want science to answer are “based on our measurement, is this effect significant?” and “how sure are we of that?” Social science papers don’t answer these questions. Instead, they answer a different, convoluted one: “how unlikely would we be to get this measurement in a world where the real effect was exactly zero?” This “unlikeliness” is called a p-value.

This bait-and-switch hides a bunch of unjustified assumptions (e.g., about the distribution of measurement error). More crucially, it misses the actual point of the investigation. We want to know whether the effect of some variable on the outcome is big or small, consistent or erratic, principal or marginal. We don’t generally care whether it’s likely to be “exactly 0” or could possibly be 0.1 — this difference isn’t any more meaningful than the difference between 2.6 and 2.7 or -1.4 and -1.5. In fact, when you have measurement error you almost never measure “exactly 0” even when the real effect is null. This, combined with some willful carelessness, is what allows the p-value approach to “prove as true” any random thing and its opposite.
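For the curious, here is a minimal Python sketch of what that convoluted question amounts to. It is not how any particular paper computes its p-values (those typically come from a canned t-test or regression). It simply simulates many "null worlds" where the real effect is exactly zero and counts how often noise alone produces a measurement at least as large as the one observed. The group size, the noise level, the observed effect of 0.5, and the function names are all made up for illustration.

```python
import random
import statistics


def simulate_null_effects(n_worlds=5000, n_per_group=30, noise_sd=1.0, seed=0):
    """Measure the 'effect' in many worlds where the true effect is exactly zero.

    Each world draws two groups from the SAME distribution, so any
    difference in their means is pure measurement noise.
    """
    rng = random.Random(seed)
    effects = []
    for _ in range(n_worlds):
        group_a = [rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
        group_b = [rng.gauss(0.0, noise_sd) for _ in range(n_per_group)]
        effects.append(statistics.fmean(group_a) - statistics.fmean(group_b))
    return effects


def p_value(observed_effect, null_effects):
    """Share of null worlds with an effect at least as extreme as the observed one."""
    extreme = sum(1 for e in null_effects if abs(e) >= abs(observed_effect))
    return extreme / len(null_effects)


null_effects = simulate_null_effects()

# Even though the true effect is zero everywhere, no world measures exactly zero.
exact_zeros = sum(1 for e in null_effects if e == 0.0)
print("null worlds measuring exactly 0:", exact_zeros)

# Hypothetical observed difference of 0.5 between the two groups.
print("simulated p-value for an observed effect of 0.5:", p_value(0.5, null_effects))
```

Note what the number does not tell you: how big the effect is, how consistent it is, or how likely it is to be real. It only says how often pure noise would look like this.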

The main reason p-values are used is that they can be calculated with a cookie-cutter approach by Microsoft Excel™. This approach doesn’t require doing any math, which suits social scientists just fine (if they knew math, they’d be economists or industry data scientists). It doesn’t require any analysis of context, prior probabilities, or alternative hypotheses. It doesn’t require understanding anything at all.

The formulaic nature of p-valuing was supposed to make it foolproof. Instead, it just makes it easy to fool yourself. The most common way is p-hacking: doing a lot of independent measurements but reporting the “unlikeliness” as if you only did one.

Here’s the intuition: if you threw a pair of dice once and got snake eyes ⚀⚀, you may find it surprising and suspect that the dice could be loaded. Even then, you’d probably want more evidence: the chance of snake eyes on a single roll is 2.7%. Low, but not so improbable it couldn’t be a coincidence. But if you rolled the dice 50 times you’d expect to get snake eyes once or twice, so that wouldn’t be a surprise. Getting ⚀⚀ on rolls #8 and #37 isn’t a reason to believe the dice are loaded. If you claim that they are by only reporting these two throws and ignoring the other forty-eight, you’re just cherry picking.
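The dice arithmetic is easy to check yourself. This short sketch assumes two fair six-sided dice and independent rolls; the variable names are mine.

```python
from fractions import Fraction

# Fair dice: one outcome out of 36 is snake eyes.
P_SNAKE_EYES = Fraction(1, 36)
N_ROLLS = 50

# Expected number of snake eyes across all rolls.
expected_count = N_ROLLS * P_SNAKE_EYES

# Chance of seeing snake eyes at least once: 1 minus the chance of never seeing it.
p_at_least_one = 1 - (1 - P_SNAKE_EYES) ** N_ROLLS

print(f"chance on a single roll: {float(P_SNAKE_EYES):.4f}")
print(f"expected snake eyes in {N_ROLLS} rolls: {float(expected_count):.2f}")
print(f"chance of at least one in {N_ROLLS} rolls: {float(p_at_least_one):.2f}")
```

With fair dice, the chance of at least one ⚀⚀ in 50 rolls comes out to roughly three in four. Reporting only that roll makes a likely event look like a rare one.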

A Profile of Trustworthiness

Lest I be accused myself of cherry picking, I googled “famous papers dating psychology”, clicked the first result, and opened the first paper mentioned. Here’s how the paper is described in the article:

…physical appearance is indeed important. A 2008 study in which participants rated actual online profiles confirmed this, but also explored the criteria that made certain photos attractive (Fiore et al., 2008). Men were considered more attractive when they looked genuine, extraverted, and feminine, but not overly warm or kind.

That physical appearance is important in online dating is apparent to anyone with a pulse. Most people would also guess that looking genuine and extraverted makes men attractive, but not because of anything this study shows. That looking feminine, cold, or unkind is attractive for a man would be quite a surprise indeed. In the event, the study doesn’t prove that whatsoever.

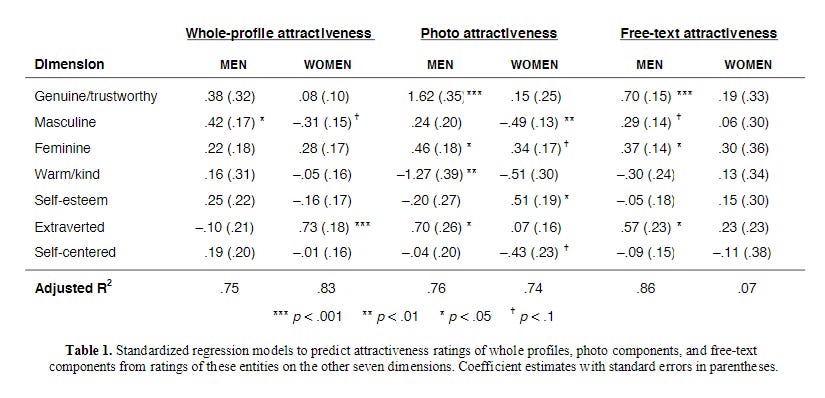

Of course, the researchers don’t have direct access to “trustworthiness” or “attractiveness” or even “men”. All Fiore et al. could do is sit a bunch of undergrads in front of screens and have them rate 50 dating profiles on these dimensions. The results of these ratings are summarized in the table below:

The numbers in the table represent the coefficients, how much the ranking of each dimension like “masculine” or “self-esteem” contributed to the attractiveness ranking of each profile component. The numbers in parentheses are the standard error — how accurately each coefficient was estimated. The asterisks and crosses next to them are a lie.

A cross next to a measurement means that its p-value is smaller than 10%. It also marks the grave of the authors’ sense of shame. A single asterisk means a p-value smaller than 5% — a generous cutoff that is almost twice as high as the 2.7% chance of a snake eyes roll. But there isn’t just a single measurement in the table, there are 42. 42 independent dice rolls. A result marked by a cross or single asterisk is much less significant than the insignificance of getting ⚀⚀ on some rolls out of 42.

Two asterisks mean a p-value smaller than 1%. There are two results like this: masculine-rated photos making women appear less attractive, and warm/kind photos doing the same for men. There is roughly a one-in-three chance of getting at least one 1% outcome in 42 independent trials by sheer luck; getting two is less likely but hardly miraculous. But let us grant that only one of these results is a fluke and the other is “real”. How could we possibly know which one? I have a weak hunch that masculine photos for women are unattractive, and a strong hunch that I need to pour myself a stiff drink before continuing.
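Here is a rough sketch of that arithmetic for a table with 42 entries. It assumes, as the dice analogy does, that the 42 tests are independent and that every true effect is zero. Real ratings are correlated, so treat these as ballpark figures. The Bonferroni correction at the end is a standard textbook adjustment that I am adding for comparison, not something the paper used, and the function names are mine.

```python
N_TESTS = 42  # number of coefficients in the table


def chance_of_at_least_one(alpha, n_tests=N_TESTS):
    """Chance that at least one of n_tests null results dips below alpha by luck."""
    return 1 - (1 - alpha) ** n_tests


def chance_of_two_or_more(alpha, n_tests=N_TESTS):
    """Chance that two or more null results dip below alpha by luck."""
    p_none = (1 - alpha) ** n_tests
    p_exactly_one = n_tests * alpha * (1 - alpha) ** (n_tests - 1)
    return 1 - p_none - p_exactly_one


# Thresholds behind the cross, one asterisk, two asterisks, and three asterisks.
for alpha in (0.10, 0.05, 0.01, 0.001):
    print(
        f"alpha={alpha}: "
        f"at least one fluke {chance_of_at_least_one(alpha):.3f}, "
        f"two or more flukes {chance_of_two_or_more(alpha):.3f}"
    )

# Bonferroni: divide the usual 5% cutoff by the number of tests.
bonferroni_cutoff = 0.05 / N_TESTS
print(f"Bonferroni-corrected cutoff: {bonferroni_cutoff:.5f}")
```

Under these assumptions, a fluke at the 10% or 5% level is close to guaranteed somewhere in the table, while the corrected cutoff lands near 0.001.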

We’re left with three measurements that pass the .001 significance threshold. With 42 trials, that’s a reasonable cutoff for seriously considering a result. First: female profiles rated as extraverted were also rated as attractive. No surprise here. Every woman learns that boys like the sociable and popular girls by the time she’s 13, at the latest.

And finally: photos and text that looked genuine/trustworthy were each rated as attractive for men. For some reason, the “whole profile” wasn’t rated as significantly more attractive if it was genuine/trustworthy, even though the whole profile is literally just the “trustworthy” text and “trustworthy” photos put together. What to make of this discrepancy?

Fiore et al. don’t address it at all, but I have a good guess: the subjects can’t actually tell whether someone is genuine/trustworthy from a photo or a snippet of text. But even bored undergrads know that “trustworthiness” is a positive quality to look for in men, so they said that photos of attractive men look trustworthy. And then for whole profiles they didn’t, because they’re bored undergrads clicking numbers on a screen and there’s no reason to expect any consistency in their ratings. Garbage in, garbage out.

To summarize: what is plausibly true in this “famous dating psychology paper” isn’t surprising to anyone; what is surprising isn’t true. I felt confident picking a “famous” paper at random for this exercise because I’ve been scrutinizing social science methodology for over a decade. They’re all like this. The problems with p-value testing were pointed out almost a century ago. Its use and misuse in psychology in particular have been criticized for over 60 years. If social psych could have been shamed into changing how it does things, it would have happened a long time ago.

Why would an entire academic discipline stick with an approach that guarantees a majority of its published findings are going to be fake? The reason is simple yet rarely discussed. P-values produce a lot of fake results, but any more rigorous methodology wouldn’t produce any results at all. The core problem of social psychology isn’t the abundance of false positives, but the lack of true findings that aren’t just wrapping utterly obvious things in academic jargon.

Society exists, as does psychology. But, like music, they simply don’t exist inside the social psychology lab.

The Lies in “Operationalize”

The “thoughts, feelings, and behaviors” we have about other people are complex, individual, context-dependent, and dynamic. In contrast, lab experiments are good at measuring the static effects of simple variables that are consistent for different people in different contexts. The attempt to turn complicated concepts (e.g., happiness, attraction, love) into measurable observations (e.g., survey responses on a 5-point scale) is called operationalization. Operationalization is where “love science” dies stillborn.

Here’s an interesting question in dating psychology: are women attracted to jerks? This one I didn’t select at random. It was shouted at me by dozens of “red-pilled” commenters on my old dating posts, men whose entire worldview of “alpha fucks, nice guys finish last” depends on proving that only jerks get laid. When I suggested that this is not the case, as evidenced by millions of sexually active perfectly nice guys, they called me a blue-pilled beta cuck. The more erudite among them called me a beta cuck but also included a link to Carter, G. L. et al. (2013), The Dark Triad personality: Attractiveness to women. “Dark Triad” doesn’t refer to Sauron, Saruman, and the Witch-king of Angmar (who are all objectively attractive). It’s simply the academic euphemism for manipulative psychopathic narcissists.

Mainstream magazines have a natural inclination to say that women aren’t into jerks lest they be perceived as encouraging toxic masculinity or victim-blaming the jerked. And yet, they have also widely reported on the above paper. The NY Post cited Dr. Carter in Why Great Women Marry Total A-holes, a feminist writer quoted him to explain why women find narcissists attractive, and I see it reposted often by otherwise sensible writers.

Here’s the rub: how exactly are “attracted to” and “jerks” operationalized in this famous study? And who exactly are these “women”? Quoting from the paper:

“jerk” = “Two self-descriptions were generated to represent high dark triad and control men. The high DT self-description contained manifestations of the trait descriptors that comprise […]: a desire for attention, admiration, favours, and prestige; the manipulation, exploitation, deceit and flattery of others; a lack of remorse, morality concerns and sensitivity, and cynicism.”

“attracted” = “All participants were then asked a series of questions, answered on a six-point Likert scale. The first pertained to the attractiveness of the individual’s personality”

“women” = “One hundred and twenty eight female undergraduates at a British university, (mean age, 19.4; range, 18–36) participated in the study, conducted via online questionnaire. Participants were given course credit for taking part.”

The study that is widely quoted to have shown that “women are attracted to jerks” actually tested whether 19-year-olds taking an online survey for course credit rated a paragraph describing a fictional “dark triad” man as more attractive on a 6-point scale.

By the way — they didn’t. The teenagers rated the “high DT” paragraph only slightly more attractive, far below the most lenient standards of statistical significance. Even that was likely due to the fact that the “high DT” character was rated as more extraverted and less neurotic, two traits that are actually attractive. Carter et al. only got a publishable “significant” result by running a convoluted regression that omitted some personality traits but included others with no justification, a classic example of the p-hacking we described.

Out of curiosity, I ran my own survey of 1,220 adults, nearly 10 times the sample of Carter et al., and found no significant impact of (self-reported) dark triad traits taken together on romantic success. I did find that extraversion gets you laid more, which you almost certainly could have guessed.

You Already Know

Is it a good idea to “do your own research”? Depends on the field. Ornithology: knock yourself out. Radiochemistry: better leave it to the experts. Social psychology: you can’t help it, you’ve already been doing it forever.

You know men and can guess which dating app photos they find attractive. You know women and can guess what behavior they’d consider “being a jerk” and whether they find it appealing or off-putting.

You won’t be able to publish this knowledge in the Journal of Personality and Social Psychology. This knowledge is specific to your context: the people you know, the standards of attractiveness and jerkitude they abide by. You can’t prove that what you know generalizes to all people under some standardized operationalization, because it doesn’t.

But if your experience doesn’t generalize to a global truth, there’s also no global truth that particularizes to your experience. There’s no universal law of “women” and “assholes” that specifies how a bunch of undergrads in England will rate paragraphs on a 6-point scale and at the same time will tell you whether acting a little meaner to your hot coworker will make her more receptive to going on a date. These two situations have nothing to do with each other. Calling them both “attraction to assholes” is magical thinking, trying to coerce reality by uttering mere words.

It is perhaps the most pervasive superstition of modernity to reach for some global, general, academic truth to inform personal questions you encounter.

You were taught this superstition in school. It starts innocently enough: ice melts, dogs have four legs. Perhaps you’ve seen a tripod dog slip on a frozen puddle in winter, but you know that’s not what you’re supposed to answer on the quiz. Then it’s stuff like Maslow’s Hierarchy or Dunbar’s Number, delivered in the same authoritative tone as the laws of physics. School trains you to pay less attention to what you notice locally, and more to what you’re told is universal.

People who got As in school are more likely to retain this superstition. They buy books of Science™-based dating advice, books that have zero impact on their dating lives but provide an old, familiar comfort. People who got A+ are at risk of following the superstition to its most tragic outcome: becoming grad students in social psychology.

Most people do develop some skepticism about Facts™ in areas of personal interest, although this mostly just involves sourcing one’s Facts™ from a different supplier than before. Few develop ignorance as a skilled practice, the conscious habit of tuning out the cacophony of facts when you’re faced with a real question.

In that strange factless silence, you can start finding real answers.